Best practices for HP EVA, vSphere 4 and Round Robin multi-pathing

VMware vSphere 4 and the HP EVA 4x00, 6x00 and 8x00 series are ALUA compliant. Put simply, ALUA compliance means you do not need to manually identify preferred I/O paths between the VMware ESX hosts and the storage controllers.

When you create a new Vdisk on the HP EVA, the LUN is set to No Preference by default. The No Preference policy means the following:

- On controller failover (owning controller fails), the units are owned by the surviving controller

- On controller failback (previous owning controller returns), the units remain on the surviving controller. No failback occurs unless explicitly triggered.

To get a good distribution between the controllers, the following VDisk policies can be used:

Path A-Failover/failback:

- At presentation, the units are brought online to controller A

- On controller failover, the units are owned by the surviving controller B

- On controller failback, the units are brought online on controller A implicitly

Path B-Failover/failback:

- At presentation, the units are brought online to controller B

- On controller failover, the units are owned by the surviving controller A

- On controller failback, the units are brought online on controller B implicitly

In VMware vSphere the Most Recently Used (MRU) and Round Robin (RR) multi-pathing policies are ALUA compliant. Round Robin load balancing is now officially supported. These multi-path policies have the following characteristics:

- Gives preference to an optimal path to the LUN

- When all optimal paths are unavailable, uses a non-optimal path

- When an optimal path becomes available again, fails back to the optimal path

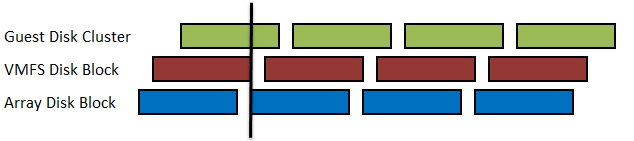

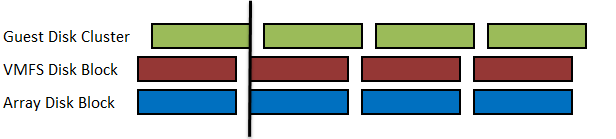

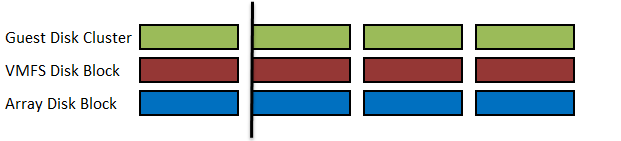

- With MRU, although each ESX server may use a different port through the optimal controller to the LUN, only a single controller port is used for LUN access per ESX server

- Round Robin queues I/O to LUNs on all ports of the owning controller in a round robin fashion, providing an instant bandwidth improvement

- Round Robin continues queuing I/O in a round robin fashion to optimal controller ports until none are available, and then fails over to the non-optimal paths

- Once an optimal path returns, Round Robin fails back to it

- Round Robin can be configured to send I/O to all controller ports for a LUN by ignoring the optimal path preference. (This may be suitable for a write-intensive environment because of the increased controller port bandwidth.)

The Fixed multi-path policy is not ALUA compliant and is therefore not recommended.
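Switching a device from Fixed or MRU to Round Robin can be sketched as below. This is a minimal sketch, assuming the vSphere 4 `esxcli nmp device setpolicy` syntax and the `VMW_PSP_RR` plugin name; the `set_round_robin` helper and the overridable `ESXCLI` variable are hypothetical names added for illustration.

```shell
#!/bin/sh
# Sketch: set the Round Robin path selection plugin on the given devices.
# ESXCLI is a hypothetical override so the commands can be previewed
# with ESXCLI=echo before running them on a real host.
ESXCLI="${ESXCLI:-esxcli}"

set_round_robin() {
    # "$@" = device names (for example naa.* identifiers)
    for dev in "$@"; do
        $ESXCLI nmp device setpolicy --device "$dev" --psp VMW_PSP_RR
    done
}
```

Calling `set_round_robin` with one or more device names applies the policy to each; with `ESXCLI=echo` it only prints the commands it would run.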

Another HP best practice is to lower the Round Robin IOPS limit from its default of 1000 to 1 for every LUN, by running the following loop body once per device (with $i holding the device name):

do esxcli nmp roundrobin setconfig --type "iops" --iops=1 --device $i ;done
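The command above expects `$i` to hold a device name. One way to wrap it in a complete loop is sketched here. The device directory `/vmfs/devices/disks`, the `naa.600` name filter, and the helper names are assumptions, not taken from the text; adjust them to match how the EVA LUNs appear on the host.

```shell
#!/bin/sh
# Sketch: set the Round Robin IOPS limit on every matching device.
# Assumptions: devices are listed under DISK_DIR and EVA LUN names
# start with PREFIX. ESXCLI can be overridden (ESXCLI=echo) for a dry run.
ESXCLI="${ESXCLI:-esxcli}"
DISK_DIR="${DISK_DIR:-/vmfs/devices/disks}"
PREFIX="${PREFIX:-naa.600}"

list_devices() {
    # Print whole-device names only; partition entries contain a colon.
    ls "$DISK_DIR" 2>/dev/null | grep "^$PREFIX" | grep -v ':'
}

set_rr_iops() {
    # $1 = IOPS value to apply to each device
    for i in $(list_devices); do
        $ESXCLI nmp roundrobin setconfig --type "iops" --iops="$1" --device "$i"
    done
}
```

Running `set_rr_iops 1` applies the recommended value. Filtering out names that contain a colon is a design choice to skip partition entries, which are not valid targets for the Round Robin setting.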

There is a bug: after rebooting the VMware ESX server, the IOPS value reverts to a random value.

To check the IOPS values on all LUNs, run the following for each device (again with $i holding the device name):

do esxcli nmp roundrobin getconfig --device $i ;done
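Because the value can change after a reboot, it can help to flag only the devices whose limit differs from the expected one. The sketch below assumes the `getconfig` output contains a line of the form `I/O Operation Limit: N`; that label, and the `iops_limit` and `check_device` helpers, are assumptions to verify against the real output on your ESX build.

```shell
#!/bin/sh
# Sketch: report devices whose Round Robin IOPS limit is not the expected value.
# Assumption: getconfig prints a line like "I/O Operation Limit: 1".
ESXCLI="${ESXCLI:-esxcli}"

iops_limit() {
    # Read getconfig output on stdin and print the number after the label.
    sed -n 's/^[[:space:]]*I\/O Operation Limit:[[:space:]]*\([0-9][0-9]*\).*$/\1/p'
}

check_device() {
    # $1 = device name, $2 = expected IOPS value; prints only on mismatch.
    actual=$($ESXCLI nmp roundrobin getconfig --device "$1" | iops_limit)
    [ "$actual" = "$2" ] || echo "$1: IOPS limit is '${actual}', expected $2"
}
```

A call such as `check_device "$i" 1` inside the device loop stays silent for correctly configured LUNs and prints one line for each LUN that needs attention.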

To work around this IOPS bug, edit the /etc/rc.local file on every VMware ESX host and add the IOPS=1 command. The rc.local file is executed after all init scripts have run, so the value is set again at every boot.
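The rc.local edit can be made repeatable with a small helper that appends the loop only once. This is a sketch under assumptions: the `naa.600` filter, the marker comment, and the `install_iops_hook` name are hypothetical, and the appended loop uses the same device-listing assumption as a manual run would.

```shell
#!/bin/sh
# Sketch: append the IOPS=1 loop to rc.local once, so it runs at every boot.
# RC_LOCAL defaults to the path named in the text; MARKER is a hypothetical
# comment used to detect an earlier install.
RC_LOCAL="${RC_LOCAL:-/etc/rc.local}"
MARKER="# set Round Robin IOPS=1 on EVA LUNs"

install_iops_hook() {
    # Do nothing if the marker is already present (keeps the edit idempotent).
    grep -qF "$MARKER" "$RC_LOCAL" 2>/dev/null && return 0
    cat >> "$RC_LOCAL" <<'EOF'
# set Round Robin IOPS=1 on EVA LUNs
for i in $(ls /vmfs/devices/disks | grep '^naa.600' | grep -v ':'); do
    esxcli nmp roundrobin setconfig --type "iops" --iops=1 --device "$i"
done
EOF
}
```

Running `install_iops_hook` twice leaves a single copy of the loop in the file. If the existing rc.local ends with an explicit `exit`, the loop would need to be placed before that line instead of appended.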

The reason for the IOPS=1 recommendation is that in HP's lab tests this setting produced an even distribution of I/Os across all EVA ports in use. If you experiment with it, you can see that the queue depth and the throughput are very even across the EVA ports used. The tested workloads also showed noticeably better overall performance with this setting.

Best practices are not a “one recommendation fits all” case.